Content Credentials for AI-Generated Images: A Digital Nutrition Label for the AI Era

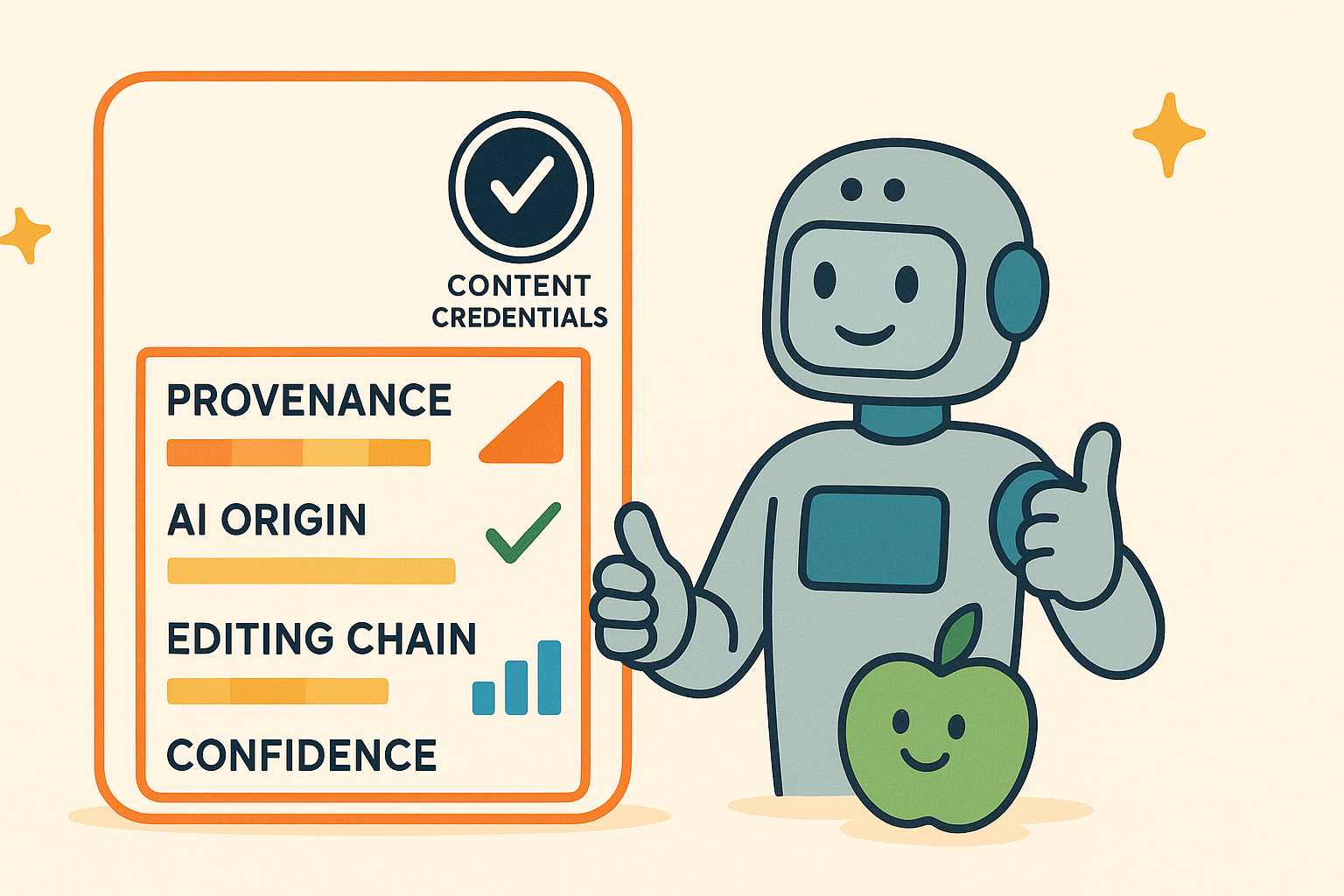

As AI-generated images become indistinguishable from authentic photographs, many people are searching for ways to detect AI images and verify content authenticity. Enter Content Credentials, a technology that works like a digital nutrition label for online media. Instead of guessing from pixels whether an image is fake, it records where the image came from and how it was made.

How to Detect AI-Generated Images with Content Credentials

Think of Content Credentials as a tamper-evident record of where a piece of content came from. An image that carries Content Credentials includes cryptographically signed metadata describing its creation story: who made it, what tools were used, and, crucially, whether AI was involved in its generation. If the image or its metadata is changed after signing, verification fails. The technology is built on the open standard from the Coalition for Content Provenance and Authenticity (C2PA), which is backed by tech giants including Adobe, Microsoft, Google, and major camera manufacturers.
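
Here is a minimal Python sketch of what "cryptographically signed" buys you. The metadata records a hash of the image, and a signature covers the metadata, so changing either the pixels or the claims makes verification fail. This is a deliberate simplification. Real Content Credentials use X.509 certificates and public-key signatures inside a C2PA manifest. This sketch substitutes a shared-secret HMAC from the standard library, and the function names and claim fields are hypothetical.

```python
import hashlib
import hmac
import json

# Assumption: a shared secret stands in for a real signing certificate.
# C2PA uses public-key signatures; HMAC is only an illustration here.
SECRET_KEY = b"demo-signing-key"


def _sign(payload: bytes) -> str:
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def sign_manifest(image_bytes: bytes, claims: dict) -> dict:
    """Bind claims to the image by hashing the image and signing both."""
    manifest = {
        "content_hash": hashlib.sha256(image_bytes).hexdigest(),
        "claims": claims,  # hypothetical fields, e.g. tool name, AI use
    }
    payload = json.dumps(manifest, sort_keys=True).encode()
    return {"manifest": manifest, "signature": _sign(payload)}


def verify_manifest(image_bytes: bytes, credential: dict) -> bool:
    """Return True only if neither the claims nor the image were altered."""
    manifest = credential["manifest"]
    payload = json.dumps(manifest, sort_keys=True).encode()
    if not hmac.compare_digest(_sign(payload), credential["signature"]):
        return False  # the claims were edited after signing
    # The signature is valid; now check that the image still matches its hash.
    return manifest["content_hash"] == hashlib.sha256(image_bytes).hexdigest()
```

The point is tamper evidence, not secrecy. Anyone can read the claims, but no one can change them, or the image they describe, without the check failing.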

The system combines three layers of protection. Cryptographically signed metadata exposes any tampering. Invisible digital watermarks are embedded in the content itself. Digital fingerprints still identify the content after compression and format changes. Together, these form what the industry calls "Durable Content Credentials." If the metadata is stripped, the watermark or fingerprint can be used to look up the original credentials, so provenance can follow content through its entire lifecycle.
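
The fingerprint layer can be illustrated with an average hash, one of the simplest perceptual hashes. Production systems use their own, more robust fingerprinting and real watermark decoders, so treat this Python sketch as a stand-in technique. It assumes the image has already been downscaled to an 8x8 grid of grayscale values. The in-memory dictionaries are hypothetical stand-ins for the lookup services a real deployment would query, and the 5-bit match threshold is an arbitrary assumption.

```python
from typing import Optional


def average_hash(gray_8x8: list) -> int:
    """64-bit average hash: one bit per pixel, set when brighter than the mean.

    Assumption: the image was already downscaled to 8x8 grayscale (0-255).
    """
    flat = [value for row in gray_8x8 for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def find_credential(
    embedded: Optional[dict],
    watermark_id: Optional[str],
    fingerprint: int,
    watermark_index: dict,  # hypothetical: watermark ID -> credential
    fingerprint_index: dict,  # hypothetical: known hash -> credential
    max_distance: int = 5,  # assumed tolerance for small edits
) -> Optional[dict]:
    """Try each durability layer in turn, from strongest binding to weakest."""
    # Layer 1: signed metadata still attached to the file.
    if embedded is not None:
        return embedded
    # Layer 2: an invisible watermark carrying an ID that points at stored credentials.
    if watermark_id is not None and watermark_id in watermark_index:
        return watermark_index[watermark_id]
    # Layer 3: a stored fingerprint within a small Hamming distance.
    for known_hash, credential in fingerprint_index.items():
        if hamming_distance(known_hash, fingerprint) <= max_distance:
            return credential
    return None
```

Small pixel changes, such as those from recompression, leave most bits of the hash unchanged. That is why a near match can recover credentials after the metadata is gone.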

AI Image Detection Tools Already Using This Technology

The adoption of Content Credentials as an AI detection solution is accelerating rapidly. Adobe Firefly automatically tags all its AI creations with Content Credentials, including detailed information about generation and subsequent edits—essentially providing built-in AI transparency for every image. OpenAI followed suit, embedding C2PA metadata in every DALL-E 3 image, complete with visible AI watermarks and creation timestamps.

Microsoft's Azure OpenAI services have integrated the standard across their platform, while Meta has rolled out "AI info" labels on Facebook and Instagram that detect and display Content Credentials. Google recently joined the C2PA steering committee and is implementing the technology in Google Search, Ads, and even the upcoming Pixel 10 smartphone cameras.

The momentum extends beyond Silicon Valley. Stability AI has added invisible watermarks to its API outputs, Shutterstock announced integration plans for its AI generator, and TikTok became the first major video platform to adopt the standard.

Hardware Manufacturers Join the Movement

Camera companies aren't sitting on the sidelines. Leica's M11-P and SL3-S models already support native Content Credentials, capturing provenance data at the moment of creation. Sony has updated firmware for its A9 III, A1, and A7S III cameras to include the technology. Nikon has demonstrated prototypes with the Z9, while Canon and Fujifilm have announced forthcoming support.

This hardware integration matters because it establishes trust from the very first moment content is created. When a photojournalist captures an image with a Content Credentials-enabled camera, the photo carries verifiable proof of how it was captured. That proof can extend from shutter click to publication, as long as each editing tool in between preserves the credentials and adds its own.
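
Here is a rough Python sketch of how such an end-to-end chain can be checked. Each step writes a new manifest that records the hash of its output and a hash of the previous manifest, similar in spirit to how C2PA manifests reference their "ingredients." To keep it short, the sketch omits signatures, and the action names are hypothetical.

```python
import hashlib
import json
from typing import List, Optional

# Simplified sketch: real C2PA manifests are also signed; signatures are omitted here.


def digest(data: bytes) -> str:
    """SHA-256 hex digest of raw bytes."""
    return hashlib.sha256(data).hexdigest()


def manifest_hash(manifest: dict) -> str:
    """Hash a manifest in a canonical (sorted-key) JSON form."""
    return digest(json.dumps(manifest, sort_keys=True).encode())


def add_step(chain: List[dict], action: str, output: bytes) -> List[dict]:
    """Record one step, linking it to the manifest of the step before it."""
    previous: Optional[dict] = chain[-1] if chain else None
    manifest = {
        "action": action,  # hypothetical action label, e.g. "captured"
        "content_hash": digest(output),
        "ingredient": manifest_hash(previous) if previous is not None else None,
    }
    return chain + [manifest]


def verify_chain(chain: List[dict], published: bytes) -> bool:
    """Check every link from the first capture to the published file."""
    if not chain or chain[0]["ingredient"] is not None:
        return False  # history must begin with a step that has no parent
    for earlier, later in zip(chain, chain[1:]):
        if later["ingredient"] != manifest_hash(earlier):
            return False  # a manifest was dropped or altered in between
    return chain[-1]["content_hash"] == digest(published)
```

If any intermediate manifest is dropped, as happens when a platform strips metadata, the following link no longer matches and verification fails.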

The Road Ahead: 2025 and Beyond

The next two years promise significant developments. Samsung's Galaxy S25 series and Google's Pixel 10 will bring Content Credentials to mainstream smartphones. Apple has announced that its Image Playground will embed identifying metadata in all AI-generated content. Even Cloudflare has committed to preserving Content Credentials through their image processing and CDN services.

Government agencies are pushing adoption forward too. The National Security Agency, along with international cybersecurity partners, recently released guidance encouraging widespread implementation across critical sectors. The C2PA 2.1 specification promises enhanced watermarking capabilities, which make credentials harder to strip away.

Real Challenges in Detecting AI-Generated Content

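Despite this momentum, significant hurdles remain. The technology requires broad adoption to be truly effective, because a single platform that strips metadata can break the entire provenance chain. Most social media platforms still remove metadata during upload, though this is slowly changing as demand for provenance information grows. There is also a logical limit: because credentials are opt-in, an image without them proves nothing either way. It may be an authentic photo, or it may be an AI image from a tool that never attached credentials or whose credentials were removed.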

Implementation complexity poses another challenge. Different manufacturers' systems don't always play nicely together, creating interoperability headaches. Perhaps most critically, success depends on users actually understanding and checking these credentials—a massive education challenge that the industry has barely begun to address.

What This Means for AI Transparency and Digital Trust

For content creators, Content Credentials offer unprecedented control over attribution and authenticity. Photographers can prove their work is genuine, while AI artists can be transparent about their creative process. News organizations can check whether user-submitted images disclose AI generation and verify where those images came from, potentially reducing the spread of misinformation and synthetic media.

Consumers gain something equally valuable: a quick way to check what a piece of media says about its own origin. Just as nutrition labels help us make decisions about food, Content Credentials let us evaluate the media we consume. When credentials are present, you can see whether a viral image was captured by a photojournalist's camera or generated by AI, and whether a political video was edited or presented raw. When they are absent, the label is simply missing, and the content deserves the same scrutiny as before.
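
Reading the label can also be sketched in Python. C2PA action assertions can carry an IPTC "digital source type" term, such as `trainedAlgorithmicMedia` for AI-generated content and `digitalCapture` for camera captures. The function below assumes a simplified manifest dictionary that has already been validated, loosely modeled on the JSON reports that C2PA tools produce. The exact field layout is an assumption. A real verifier must also check the signature and the signer's certificate before trusting any of these claims.

```python
from typing import Optional

# IPTC digital source type vocabulary used in C2PA action assertions.
IPTC = "http://cv.iptc.org/newscodes/digitalsourcetype/"


def describe_origin(manifest: Optional[dict]) -> str:
    """Summarize what a manifest claims about origin.

    Assumption: simplified layout, signature already verified elsewhere.
    """
    if manifest is None:
        # Absence of credentials says nothing about whether the image is real.
        return "unknown: no Content Credentials"
    sources = set()
    for assertion in manifest.get("assertions", []):
        # Matches both "c2pa.actions" and the newer "c2pa.actions.v2" label.
        if not assertion.get("label", "").startswith("c2pa.actions"):
            continue
        for action in assertion.get("data", {}).get("actions", []):
            if "digitalSourceType" in action:
                sources.add(action["digitalSourceType"])
    # Report the strongest AI signal found anywhere in the edit history.
    if IPTC + "trainedAlgorithmicMedia" in sources:
        return "AI-generated"
    if IPTC + "compositeWithTrainedAlgorithmicMedia" in sources:
        return "includes AI-generated elements"
    if IPTC + "digitalCapture" in sources:
        return "camera capture"
    return "signed, but origin not stated"
```

The first branch matters most. A missing manifest yields "unknown," never "authentic."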

How to Start Using AI Detection Tools Today

Organizations preparing for this shift should start by upgrading to Content Credentials-compatible software and hardware. Adobe's Creative Cloud already supports the standard across Photoshop, Lightroom, and Premiere Pro, so teams can attach credentials when they export their work. Check that every step of your content pipeline, from resizing and compression to CMS uploads, preserves credential metadata and watermarks rather than silently stripping them. Most importantly, start educating your team about why image provenance and AI transparency matter.
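
One practical safeguard for such a pipeline is a smoke test confirming that each processing step leaves the credentials in place. In JPEG files, C2PA stores its manifest store as JUMBF boxes inside APP11 marker segments. The Python sketch below walks the JPEG marker segments and reports whether an APP11 segment mentioning `c2pa` survives. It is only a presence heuristic, assumed to be good enough to catch a step that strips metadata. It does not validate signatures, so a real C2PA verifier is still needed for that. The function name is hypothetical.

```python
import struct


def has_c2pa_segment(jpeg: bytes) -> bool:
    """Heuristic only: does an APP11 JUMBF segment labeled c2pa survive?

    Checks presence, not validity; signatures are not verified here.
    """
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI marker)")
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            return False  # malformed marker stream
        marker = jpeg[pos + 1]
        if marker in (0xDA, 0xD9):
            return False  # start of scan or end of image: no more metadata segments
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        payload = jpeg[pos + 4:pos + 2 + length]
        # APP11 (0xFFEB) JUMBF segments start with the "JP" common identifier.
        if marker == 0xEB and payload.startswith(b"JP") and b"c2pa" in payload:
            return True
        pos += 2 + length
    return False
```

You could run this check on a sample image before and after each pipeline stage. Any stage that flips the result from True to False is stripping credentials.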

If you want to check whether an image is AI-generated, familiarize yourself with the Content Credentials verification tools available today. These free tools let you upload an image and inspect any credentials embedded in it. Look for the Content Credentials icon on images you encounter online. As more platforms adopt the standard, checking an image's credentials will become as routine as glancing at a website's security certificate.

The Future of AI Image Detection and Digital Authenticity

Content Credentials represent more than just another AI detection tool. They shift how we establish trust online, from spotting fakes after the fact to letting content declare its origins up front. As AI capabilities advance and synthetic media becomes more sophisticated, this technology provides an essential foundation for digital authenticity.

We're witnessing the early stages of a transparency revolution for AI-generated content. While full implementation remains years away, the trajectory is clear: more and more digital content will carry its own verifiable history. In an era where seeing is no longer believing, Content Credentials offer something invaluable: a signed, checkable record of where an image came from and how it was made.

The question isn't whether this AI transparency technology will become standard, but how quickly we can build the infrastructure to support widespread fake image detection and authentication. As creators, platforms, and consumers, we all have a role in shaping this more transparent digital future where detecting AI-generated images becomes second nature. The digital nutrition label for AI content has arrived—now it's up to us to read it.